Assessing the Role of Virtual Reality with Passive Haptics in Music Conductor Education: A Pilot Study

In Proceedings of the 2020 Human-Computer Interaction International Conference, J. Y. C. Chen and G. Fragomeni (Eds.), LNCS, vol. 12190, July 19-24, 2020, pp. 275-285. https://doi.org/10.1007/978-3-030-49695-1_18

Funded by: College of the Arts 2020 Research Incentive Award

Description

This paper presents a novel virtual reality system that offers immersive experiences for instrumental music conductor training. The system utilizes passive haptics that bring physical objects of interest, namely the baton and the music stand, within a virtual concert hall environment. Real-time object and finger tracking allow the users to behave naturally on a virtual stage without significant deviation from the typical performance routine of instrumental music conductors. The proposed system was tested in a pilot study (n=13) that assessed the role of passive haptics in virtual reality by comparing our proposed “smart baton” with a traditional virtual reality controller. Our findings indicate that the use of passive haptics increases the perceived level of realism and that their virtual appearance affects the perception of their physical characteristics.

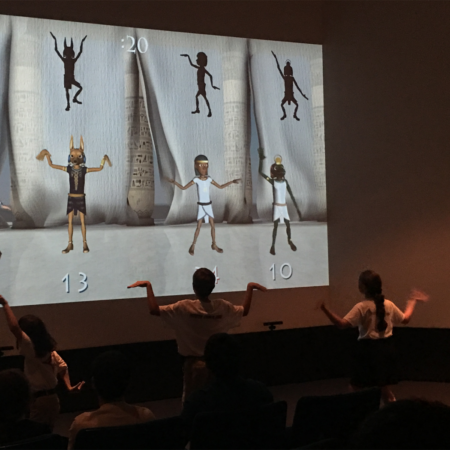

The use of computer systems in instrumental music conductor education has been a well-studied topic even outside the area of virtual reality [1]. Several systems have been proposed that offer targeted learning experiences [2,3], some of which also incorporate gamified elements [6]. Over the past decades, several visual interfaces have been designed using the technologies available at the time [4,5,7], most recently including eye tracking [8] and augmented and virtual reality platforms [3].

Recent advances in real-time object tracking and the availability of such systems as mainstream consumer products have opened new possibilities for virtual reality applications [13,14]. It has been shown that the use of passive haptics in VR contributes to a sensory-rich experience [15,16], as users now have the opportunity to hold and feel the main objects of interaction within a given immersive environment, such as tools, handles, and other instruments. For example, tracking the location of a real piano can help beginners learn how to play it using virtual reality [20]. However, the use of passive haptics in virtual environments for music education is an understudied area, because it requires precise real-time tracking of objects that are significantly smaller than a piano, such as handheld musical instruments, bows, and batons.

In this paper, we present a novel system for enhancing the training of novice instrumental music conductors through a tangible virtual environment. For the purposes of the proposed system, a smart baton and a smart music stand have been designed using commercially available tracking sensors (VIVE trackers). The users wear a high-fidelity virtual reality headset (HTC VIVE), which renders the environment of a virtual concert hall from the conductor’s standpoint. Within this environment, the users can feel the key objects of interaction within their reach, namely the baton, the music stand, and the floor of the stage, through passive haptics. A real-time hand and finger motion tracking system continuously tracks the left hand of the user, in addition to the tracking of the baton, which is usually held in the right hand. This setup creates a natural user interface that allows the conductors to perform naturally on a virtual stage, thus creating a highly immersive training experience.

The main goals of the proposed system are the following: a) Enhance the traditional training of novice instrumental music conductors by increasing their practice time in a cost-effective way, without requiring additional space allocation or time commitment from music players. b) Provide an interface for natural user interaction that does not deviate from the traditional conducting setting, including the environment, the tools, and the user behavior (hand gesture, head pose, and body posture), thus making the acquired skills highly transferable to the real-life scenario. c) Generate quantitative, just-in-time feedback on how well the timing of the users' body movements matches the corresponding music signals, since timely feedback is essential in any educational setting. d) Recreate the conditions of a real stage performance, which may help the users reduce stage fright within a risk-free virtual environment [9,10,11,12].
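To make goal (c) more concrete, the following is a minimal sketch of one way timing feedback could be quantified. It assumes that beat gestures have already been detected from the baton tracking data and that the beat times of the music are known; neither step is specified here, and the function names `beat_offsets` and `mean_abs_offset` are hypothetical. It is an illustration of the idea, not the method used in the paper.

```python
from bisect import bisect_left

def beat_offsets(gesture_times, music_beats):
    """Signed offset (seconds) of each gesture beat from the nearest music beat.

    Assumes music_beats is a non-empty list sorted in ascending order.
    A positive offset means the gesture came after the music beat.
    """
    offsets = []
    for t in gesture_times:
        i = bisect_left(music_beats, t)
        # Only the beats immediately before and after t can be the nearest.
        candidates = music_beats[max(i - 1, 0): i + 1]
        nearest = min(candidates, key=lambda b: abs(b - t))
        offsets.append(t - nearest)
    return offsets

def mean_abs_offset(offsets):
    """Average absolute timing error in seconds (assumes a non-empty list)."""
    return sum(abs(o) for o in offsets) / len(offsets)

# Hypothetical values for illustration only: a 120 bpm beat grid and three gestures.
music = [0.0, 0.5, 1.0, 1.5]
gestures = [0.02, 0.45, 1.10]
offsets = beat_offsets(gestures, music)
summary = mean_abs_offset(offsets)
```

A single summary number such as the mean absolute offset could be reported after each practice run, while the signed offsets show whether the user tends to anticipate or lag the beat.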

A small-scale pilot study (n=13) was performed in order to assess the proposed system and particularly the role of passive haptics in this virtual reality application. The main focus of the study was to test whether the use of passive haptics increases the perceived level of realism in comparison to a typical virtual reality controller, and whether the virtual appearance of a real physical object, such as the baton, affects the perception of its physical characteristics. These hypotheses were tested using A/B tests followed by short surveys. The statistical significance of the collected data was calculated, and the results are discussed in detail. The reported findings support our hypotheses and set the basis for a larger-scale future study.
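As an illustration of how significance might be assessed for A/B preference data from a sample this small, here is a sketch of an exact two-sided sign test. The text above does not state which statistical test the study used, so the sign test is an assumption chosen for simplicity, `sign_test_p` is a hypothetical helper, and the counts in the example are invented rather than taken from the study.

```python
from math import comb

def sign_test_p(wins_a, wins_b):
    """Exact two-sided sign test p-value for paired A/B preferences.

    wins_a and wins_b count participants who preferred A or B; ties are
    assumed to have been dropped. Under the null hypothesis each
    preference is equally likely, so the larger count follows
    Binomial(n, 0.5).
    """
    n = wins_a + wins_b
    k = max(wins_a, wins_b)
    # Upper tail probability P(X >= k) for X ~ Binomial(n, 0.5).
    tail = sum(comb(n, i) for i in range(k, n + 1)) / 2 ** n
    # Double the tail for a two-sided test, capped at 1.
    return min(1.0, 2 * tail)

# Invented example: 11 of 13 participants prefer condition A over condition B.
p_value = sign_test_p(11, 2)
```

With only 13 participants, an exact test of this kind avoids the large-sample approximations behind many common alternatives.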

Additional information

| Field | Value |
|---|---|
| Author | Barmpoutis, Angelos; Faris, Randi; Garcia, Luis; Gruber, Luis; Li, Jingyao; Peralta, Fray; Zhang, Menghan |
| Journal | In Proceedings of the 2020 Human-Computer Interaction International Conference, J. Y. C. Chen and G. Fragomeni (Eds.), LNCS |
| Volume | 12190 |
| Month | July 19-24 |
| Year | 2020 |
| DOI | https://doi.org/10.1007/978-3-030-49695-1_18 |
| Funding | College of the Arts 2020 Research Incentive Award |
| Pages | 275-285 |

Citation

BibTeX

@article{digitalWorlds:219,
  doi = {https://doi.org/10.1007/978-3-030-49695-1_18},
  author = {Barmpoutis, Angelos and Faris, Randi and Garcia, Luis and Gruber, Luis and Li, Jingyao and Peralta, Fray and Zhang, Menghan},
  title = {Assessing the Role of Virtual Reality with Passive Haptics in Music Conductor Education: A Pilot Study},
  journal = {In Proceedings of the 2020 Human-Computer Interaction International Conference, J. Y. C. Chen and G. Fragomeni (Eds.), LNCS},
  month = {July 19-24},
  volume = {12190},
  year = {2020},
  pages = {275-285}
}